Kubernetes

What is it?

Kubernetes, often abbreviated as K8s (because there are 8 letters between "K" and "s" in Kubernetes), is an open-source container orchestration platform designed to automate the deployment, scaling, and management of containerized applications. It was originally developed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF), which is a part of the Linux Foundation. Kubernetes is widely used for managing containerized applications in complex and dynamic environments.

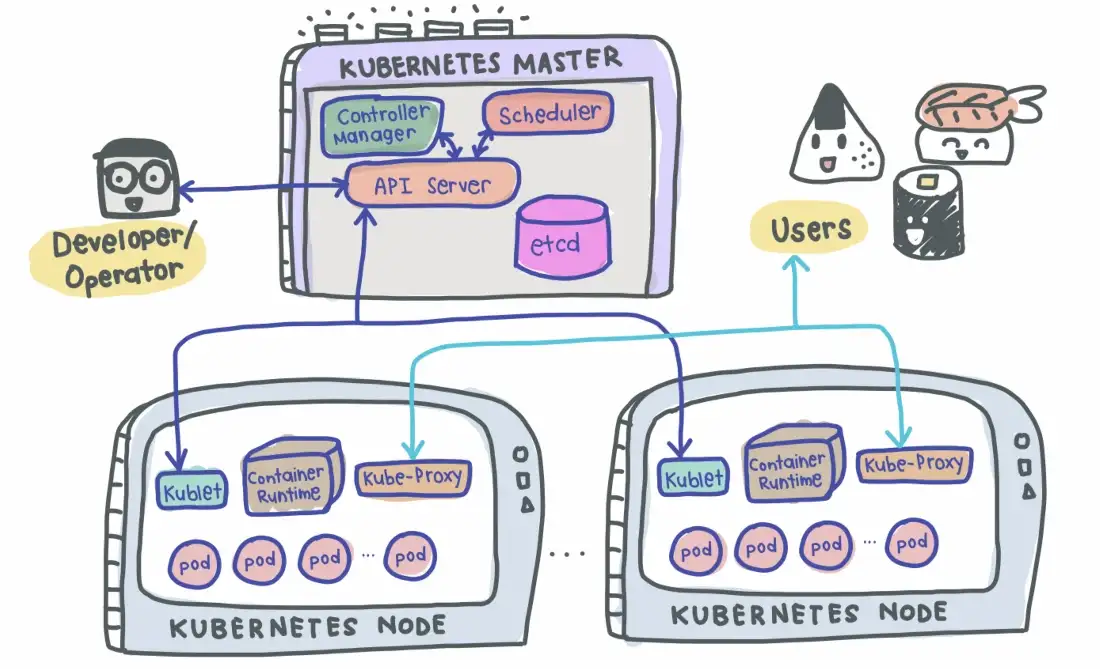

Here's a breakdown of the key concepts and components within Kubernetes:

Cluster

At the highest level, a Kubernetes cluster is a set of nodes (machines) that work together to run and manage containerized applications. Spreading workloads across multiple nodes gives the cluster scalability, high availability, and fault tolerance. A cluster consists of a control plane (traditionally hosted on a master node) and one or more worker nodes that run the application workloads.

Node

A node is a physical or virtual machine that runs the Kubernetes software and hosts containers. Each node is part of a larger cluster.

Master Node

The master node hosts the control plane of the Kubernetes cluster. It manages the overall state of the cluster: it schedules applications, scales resources, and communicates with the worker nodes. A master node runs the following components:

- API Server: The API server exposes a RESTful interface through which users and other components interact with the cluster. It validates and processes requests to create, update, or query resources and persists the resulting state in etcd.
- etcd: etcd is a distributed, consistent key-value store that acts as the central datastore for the cluster. It stores configuration data, resource definitions, and the current state of the cluster. When run with multiple members, etcd stays available if a minority of members fail and keeps the cluster's state consistent.
- Controller Manager: The controller manager runs the controllers that regulate the state of the cluster, such as the replication, Deployment, and StatefulSet controllers. Each controller continuously works to make the actual state of the cluster match the desired state defined in the resource configurations.
- Scheduler: The scheduler assigns Pods to nodes based on resource requirements, node availability, and other constraints. This keeps resource utilization efficient and the workload balanced across nodes.
- Cloud Controller Manager (optional): If the cluster is deployed in a cloud environment, the cloud controller manager interacts with the cloud provider's APIs to manage resources such as load balancers, virtual machines, and storage volumes.
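To make the scheduler's inputs concrete, here is a minimal Pod sketch. The resource requests and the `nodeSelector` are constraints the scheduler checks when choosing a node; the Pod name, the `disktype: ssd` node label, the image tag, and the numbers are illustrative assumptions, not values from this setup.

```yaml
# Hypothetical Pod used only to illustrate scheduling constraints.
apiVersion: v1
kind: Pod
metadata:
  name: scheduling-demo            # hypothetical name
spec:
  # Only nodes carrying this label are candidates (assumed label).
  nodeSelector:
    disktype: ssd
  containers:
    - name: app
      image: nginx:1.25            # assumed tag
      resources:
        # The scheduler only picks a node whose allocatable CPU and memory,
        # minus what other Pods already request, covers these amounts.
        requests:
          cpu: "250m"
          memory: "128Mi"
```

If no node satisfies both constraints, the Pod stays in the `Pending` state until one does.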

Worker Node

Worker nodes (historically also called minions) are the machines where containers are actually deployed and run. They communicate with the control plane on the master node and execute the work it assigns. Each worker node runs the following components:

- Kubelet: The kubelet runs on each node and ensures that containers are running in Pods as specified in their Pod definitions. It communicates with the control plane to fetch Pod specifications, manages the container lifecycle, and reports the node's status.
- Kube Proxy: Kube Proxy runs on each node and maintains the network rules that enable communication between Pods and Services within the cluster. It helps expose Services to the network and load-balances traffic across the Pods behind each Service.
- Container Runtime: The container runtime, such as containerd or CRI-O, runs on each node and is responsible for pulling container images, creating and managing containers, and ensuring their isolation and resource allocation. (Docker Engine was supported directly through the dockershim component until Kubernetes 1.24.)
- Pods: Worker nodes host the Pods scheduled onto them. A Pod is the smallest deployable unit in Kubernetes and represents a single instance of a running application. A Pod can contain one or more containers that share the same network namespace (IP address and ports) and can share storage volumes.
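A minimal sketch of a two-container Pod shows what "share the same network and storage" means in practice. The Pod name, container names, image tags, and file paths below are assumptions chosen for illustration.

```yaml
# Hypothetical two-container Pod sharing a volume and a network namespace.
apiVersion: v1
kind: Pod
metadata:
  name: shared-pod-demo            # hypothetical name
spec:
  volumes:
    # Scratch volume that lives as long as the Pod; both containers mount it.
    - name: shared-data
      emptyDir: {}
  containers:
    - name: web
      image: nginx:1.25            # assumed tag
      ports:
        - containerPort: 80
      volumeMounts:
        # nginx serves files from this directory.
        - name: shared-data
          mountPath: /usr/share/nginx/html
    - name: content-writer
      image: busybox:1.36          # assumed tag
      # Writes a page into the shared volume, then keeps running.
      command: ["sh", "-c", "echo hello > /data/index.html && while true; do sleep 3600; done"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
```

The page written by `content-writer` is served by `web`, and because both containers share one network namespace, `content-writer` could also reach nginx at `localhost:80`.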

Namespace

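Namespaces are virtual clusters within a physical cluster. They scope resource names and let you divide a single Kubernetes cluster into separate environments (for example, per team or per stage), which helps organize and manage resources. The separation is logical: by themselves, namespaces do not enforce network or security isolation.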
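As a sketch, a namespace for a development environment could be declared as below, optionally with a ResourceQuota that caps what the namespace may consume. The name `dev` and the quota figures are assumptions for illustration.

```yaml
# Hypothetical namespace for a development environment.
apiVersion: v1
kind: Namespace
metadata:
  name: dev
---
# Optional: cap the total resources that objects in this namespace may request.
apiVersion: v1
kind: ResourceQuota
metadata:
  name: dev-quota
  namespace: dev
spec:
  hard:
    requests.cpu: "2"
    requests.memory: 4Gi
    pods: "10"
```

Namespaced objects then set `metadata.namespace: dev` (or are applied with `kubectl apply -n dev`), and the same object name can be reused in another namespace. Note that once a quota covers `requests.cpu` or `requests.memory`, Pods in that namespace must declare those requests (or receive defaults from a LimitRange) to be admitted.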

Managing and Controlling a K8s Cluster

In Kubernetes, a resource refers to a declarative representation of a system object that you want to create or manage within the cluster. Resources are defined using YAML (or JSON) manifests and can represent various components, configurations, and functionalities that make up your application or system.

Here are a few common types of Kubernetes resources:

- Pod: The smallest deployable unit in Kubernetes. It can hold one or more containers and is used to group tightly coupled application components.
- Deployment: Manages the deployment and scaling of a set of identical Pods, ensuring that the desired number of replicas is maintained at all times.
- Service: Provides a stable network endpoint and load balancing for a set of Pods, so that other Pods or external clients can reach them.
- ConfigMap: Stores configuration data as key-value pairs, which can be used as environment variables or as configuration files mounted inside containers.
- Secret: Similar to a ConfigMap, but designed to store sensitive data such as passwords, tokens, or API keys. Secret values are only base64-encoded, not encrypted, unless encryption at rest is configured for the cluster.
- StatefulSet: Manages stateful applications by giving each Pod a stable network identity and its own persistent storage, and by performing ordered scaling and rolling updates.
- PersistentVolumeClaim (PVC): Represents a request for storage by a user or a Pod. PVCs are bound to PersistentVolumes, which provide the actual storage.
- Namespace: A virtual cluster within a Kubernetes cluster that allows you to partition resources, providing separation and better organization.
- Job: Runs a task or batch job to completion, retrying failed Pods until the task succeeds or a retry limit is reached. It is useful for one-off tasks such as data processing.
- CronJob: Creates Jobs on a repeating schedule written in cron syntax, which makes it suitable for periodic tasks.
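The ConfigMap and Secret entries above describe two ways of consuming configuration. A minimal sketch combining them is shown below; every name, key, value, and image here is a hypothetical placeholder, and a real Secret would not hold a literal password in version control.

```yaml
# Hypothetical ConfigMap holding non-sensitive settings.
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
data:
  LOG_LEVEL: info
  app.properties: |
    feature.x.enabled=true
---
# Hypothetical Secret; stringData takes plain text, which the API server
# merges into the base64-encoded data field.
apiVersion: v1
kind: Secret
metadata:
  name: app-secret
type: Opaque
stringData:
  DB_PASSWORD: change-me           # placeholder value
---
apiVersion: v1
kind: Pod
metadata:
  name: config-demo
spec:
  containers:
    - name: app
      image: busybox:1.36          # assumed tag
      command: ["sh", "-c", "cat /etc/app/app.properties && sleep 3600"]
      env:
        # One key from the ConfigMap exposed as an environment variable.
        - name: LOG_LEVEL
          valueFrom:
            configMapKeyRef:
              name: app-config
              key: LOG_LEVEL
        # One key from the Secret exposed as an environment variable.
        - name: DB_PASSWORD
          valueFrom:
            secretKeyRef:
              name: app-secret
              key: DB_PASSWORD
      volumeMounts:
        # Each ConfigMap key appears as a file under /etc/app.
        - name: config-volume
          mountPath: /etc/app
          readOnly: true
  volumes:
    - name: config-volume
      configMap:
        name: app-config
```

All three objects can live in one file separated by `---` and be applied together with a single `kubectl apply -f`.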

These resources are defined using YAML files that describe their properties, configurations, and desired states. When you apply these manifest files to the Kubernetes cluster using the kubectl apply command, the Kubernetes control plane takes care of ensuring that the actual state of the resources matches the desired state defined in the manifests. This declarative approach simplifies the management and automation of complex containerized applications in the Kubernetes environment.

Here's an example of running a basic Deployment resource in Kubernetes:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-basic-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: my-basic-app
  template:
    metadata:
      labels:
        app: my-basic-app
    spec:
      containers:
        - name: app-container
          image: nginx:latest
          ports:
            - containerPort: 80
```

Explanation:

- This YAML manifest defines a Deployment resource that manages the deployment of a simple application using the NGINX Docker image.
- `replicas: 2` specifies that you want to run 2 replicas (instances) of the application.
- `selector` specifies the labels used to identify the Pods managed by this Deployment; they must match the labels in the Pod template.
- The `template` section defines the Pod template for the Deployment.
- Inside the Pod template, the `containers` section specifies a single container named `app-container` that uses the NGINX Docker image with the `latest` tag.
- The container listens on port 80, which is the default port for the NGINX web server.

To deploy this example, save the YAML content to a file (e.g., `basic-deployment.yaml`), and then use the `kubectl apply` command to apply it to your Kubernetes cluster:

```shell
kubectl apply -f basic-deployment.yaml
```

This will create the specified Deployment and start running two instances of the NGINX web server. You can verify the status of the Deployment using `kubectl get deployments` and check the running Pods using `kubectl get pods`.
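The two NGINX Pods get their own, changing IP addresses, so clients usually reach them through a Service. A minimal sketch, assuming the hypothetical name `my-basic-service`, that selects the Pods by the `app: my-basic-app` label from the Deployment's Pod template:

```yaml
# Hypothetical Service in front of the Pods of my-basic-deployment.
apiVersion: v1
kind: Service
metadata:
  name: my-basic-service
spec:
  type: ClusterIP                  # reachable only from inside the cluster
  # Traffic goes to Ready Pods carrying this label.
  selector:
    app: my-basic-app
  ports:
    - name: http
      protocol: TCP
      port: 80                     # port the Service exposes
      targetPort: 80               # containerPort on the selected Pods
```

Kube Proxy on each node programs the rules that spread connections to the Service across both replicas. For a quick local test, `kubectl port-forward service/my-basic-service 8080:80` forwards `localhost:8080` to the Service.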

Keep in mind that this is a minimal example, and in real-world scenarios, you might need to customize the configuration, add environment variables, specify resources, and more based on your application's requirements.
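As one possible direction, the sketch below extends the basic Deployment with a pinned image tag, an environment variable, resource requests and limits, and a readiness probe. The tag, the `APP_ENV` variable, and all numbers are assumptions for illustration rather than recommended values.

```yaml
# Hypothetical variant of my-basic-deployment with common production settings.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-basic-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: my-basic-app
  template:
    metadata:
      labels:
        app: my-basic-app
    spec:
      containers:
        - name: app-container
          image: nginx:1.25        # pinned tag instead of latest (assumed version)
          ports:
            - containerPort: 80
          env:
            - name: APP_ENV        # hypothetical variable; stock nginx ignores it
              value: development
          resources:
            requests:              # used by the scheduler for placement
              cpu: 100m
              memory: 64Mi
            limits:                # enforced on the node at runtime
              cpu: 500m
              memory: 128Mi
          readinessProbe:          # Pod receives Service traffic only after this passes
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 2
            periodSeconds: 5
```

Pinning the tag makes rollouts reproducible: with `latest`, two nodes can pull different images for the same manifest.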

Helm

What is Helm?

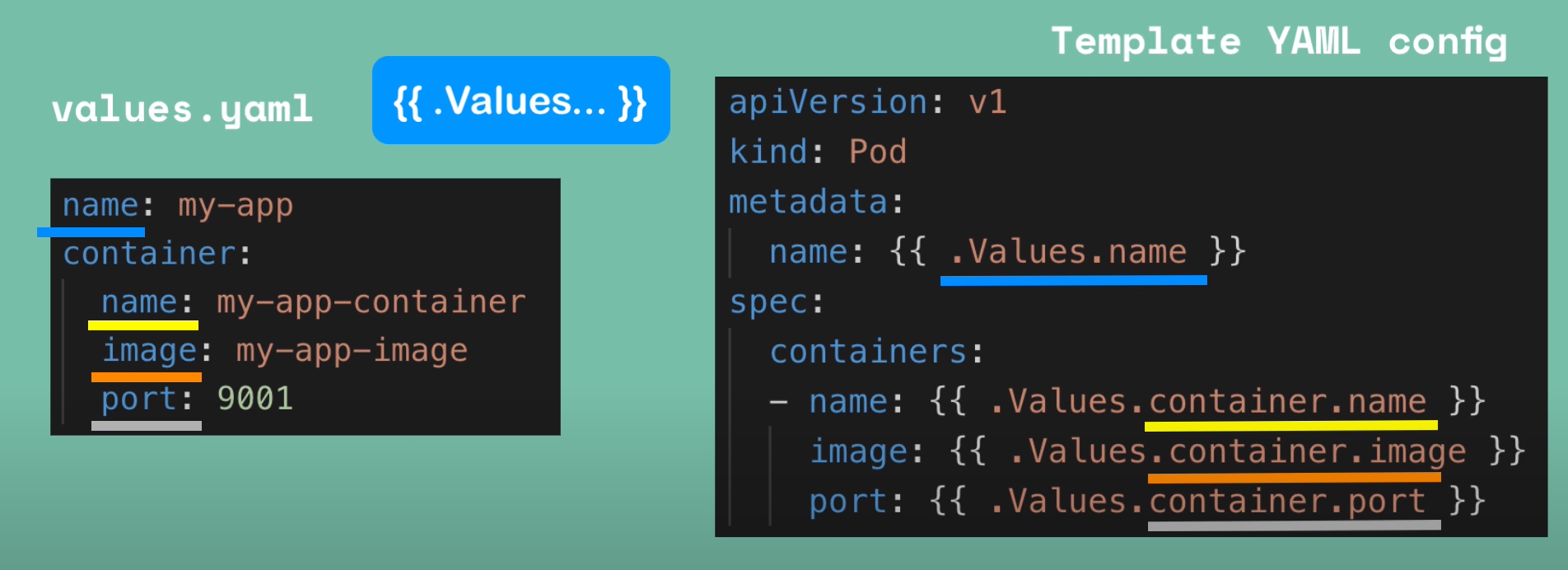

Helm is a package manager for Kubernetes that simplifies the deployment and management of applications using pre-defined templates called charts. A chart packages all the resources required for an application, including deployments, services, and configurations. Helm allows for parameterization and customization of charts, streamlining the deployment process. It enables versioned releases, easy rollbacks, and sharing of application configurations as code. Helm promotes best practices by encouraging reusable and modular application definitions, making it easier to manage complex applications on Kubernetes.

Helm Chart

A Helm chart is a package of pre-configured Kubernetes resources. It provides a way to define, install, and manage the configuration of Kubernetes applications. A chart is a directory containing metadata (`Chart.yaml`), default values for configuration options (`values.yaml`), and templates (in `templates/`) that Helm renders into the Kubernetes manifests that make up the application. The metadata and values are plain YAML; the templates are YAML manifests with Go template placeholders.
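To show how those parts fit together, here is a sketch of a tiny chart that wraps the basic NGINX Deployment. The three files are shown in one block, each starting with a comment naming its path; the chart name `my-chart` and all values are hypothetical.

```yaml
# --- my-chart/Chart.yaml (chart metadata) ---
apiVersion: v2
name: my-chart
description: Example chart wrapping the basic NGINX Deployment
type: application
version: 0.1.0                     # version of the chart itself
appVersion: "1.25"                 # version of the packaged application

# --- my-chart/values.yaml (defaults, overridable per install) ---
replicaCount: 2
image:
  repository: nginx
  tag: "1.25"

# --- my-chart/templates/deployment.yaml (rendered by Helm into a manifest) ---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}-web
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app: {{ .Release.Name }}-web
  template:
    metadata:
      labels:
        app: {{ .Release.Name }}-web
    spec:
      containers:
        - name: web
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
          ports:
            - containerPort: 80
```

`helm template my-release ./my-chart` prints the rendered manifests without installing, `helm install my-release ./my-chart --set replicaCount=3` installs with an overridden value, and `helm rollback my-release 1` returns the release to its first revision.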

Helm charts can be used to deploy applications on any Kubernetes cluster, making it easy to reproduce and share deployments across teams and organizations.

Tilt Development Environment

What is Tilt?

Tilt is a development tool that enhances the local development experience for Kubernetes applications. It streamlines the iterative development process by automating the build and deployment steps. Tilt monitors code changes and automatically rebuilds and deploys application components into a local or remote Kubernetes cluster. This rapid feedback loop reduces the need for manual intervention and accelerates development cycles. Tilt also provides a real-time dashboard that visualizes application components, logs, and resources, aiding developers in diagnosing issues and collaborating effectively.

Features that we use

- Read the infrastructure definition from the `Tiltfile`.
- Build apps (images) from local directories, following each app's `Dockerfile`.
- Read the Helm chart and patch the chart's default values with local values to generate Kubernetes manifests for our own dev environment.
- Replace the app images in the manifests with the images Tilt has built, then deploy them to the local Kubernetes cluster.
- Add extra Kubernetes resources from Tilt itself by creating a manifest and referencing it in the `Tiltfile`.
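As a sketch of the values-patching step, a local override file might look like the one below. The file name, the keys (which assume a chart shaped like a typical `replicaCount`/`image` layout), and the image name `my-app` are all assumptions that would need to match the real chart. A Tiltfile would typically pass this file to Tilt's `helm()` built-in through its `values` argument and build the image with `docker_build('my-app', '.')`, which lets Tilt replace references to `my-app` in the rendered manifests with the image it just built.

```yaml
# values-dev.yaml (hypothetical local override for the chart's values.yaml)
# Helm merges this over the chart defaults: only the keys listed here change.
replicaCount: 1                    # one replica is enough for local development
image:
  # Assumed to match the image name given to docker_build in the Tiltfile;
  # Tilt swaps in the tag of the image it built.
  repository: my-app
  tag: dev
```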